Global Memory & High-bandwidth Memory (HBM) Market: Technology Advancements, Growth Opportunities, and Industry Trends 2025–2030

The Global Memory & High-bandwidth Memory (HBM) Market is analyzed in this report across type, bandwidth level, end-use, and region, highlighting major trends and growth forecasts for each segment.

Introduction:

Memory and High-Bandwidth Memory (HBM) technologies are transforming the architecture of modern computing across a wide range of industries. These next-generation memory solutions are essential to speeding up data-intensive workloads and enabling faster processing, particularly in high-performance computing (HPC), artificial intelligence (AI), graphics, and data center operations. HBM, with its vertically stacked die design and significantly higher bandwidth than conventional memory modules, is redefining the boundaries of memory performance.

The global Memory & HBM market is undergoing rapid expansion, fueled by a surge in AI workloads, scaling cloud infrastructure, increasing use of autonomous systems, and growing demand for real-time data processing at the edge. Valued at approximately USD 2.5 billion in 2024, the market is forecast to grow at a CAGR of 15%, reaching an estimated USD 100.7 billion by 2030. As technologies such as generative AI, quantum computing, and 5G evolve, the need for high-speed, energy-efficient, and compact memory solutions will intensify—cementing HBM’s role as a foundational element of future computing.
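The link between a base value, a CAGR, and an end value is the compound-growth formula, end = base × (1 + r)^years. The sketch below applies it to the figures quoted above, assuming a 2024 base and six compounding years to 2030 (the report does not state the period explicitly). Under that assumption, 15% growth from USD 2.5 billion gives roughly USD 5.8 billion, while reaching USD 100.7 billion would require roughly 85% a year, so the stated figures may rest on a different base or scope.

```python
def project_value(base_value: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years


def implied_cagr(base_value: float, end_value: float, years: int) -> float:
    """Solve end = base * (1 + r) ** years for the annual rate r."""
    return (end_value / base_value) ** (1.0 / years) - 1.0


# Figures quoted in the text. The six-year horizon is an assumption.
BASE_2024_USD_BN = 2.5
END_2030_USD_BN = 100.7
STATED_CAGR = 0.15
YEARS = 2030 - 2024

if __name__ == "__main__":
    projected = project_value(BASE_2024_USD_BN, STATED_CAGR, YEARS)
    implied = implied_cagr(BASE_2024_USD_BN, END_2030_USD_BN, YEARS)
    print(f"Projected 2030 value at stated CAGR: USD {projected:.2f} bn")
    print(f"CAGR implied by stated start and end values: {implied:.1%}")
```

The same two functions can be reused to sanity-check the regional growth rates quoted later in the report.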

Market Dynamics:

The global Memory & High-Bandwidth Memory (HBM) market is experiencing strong momentum, driven by rising performance demands and rapid advancements in computing architectures. Growth is propelled by the proliferation of data-heavy applications—including AI, machine learning, 5G, and advanced gaming—that require higher memory bandwidth and lower latency. The industry is moving beyond traditional DRAM to embrace HBM and other advanced memory formats, unlocking gains in speed, energy efficiency, and scalability. Key enablers include the adoption of 2.5D and 3D integration techniques, particularly those leveraging Through-Silicon Vias (TSVs), which are instrumental in delivering compact, high-throughput solutions for GPUs, data accelerators, and AI processors.

Opportunities for expansion are substantial, especially in areas requiring power-efficient memory in data centers, the integration of HBM into autonomous and edge computing systems, and the deployment of high-speed memory for real-time analytics in sectors such as finance, healthcare, and automotive. HBM is becoming increasingly critical to the design of next-gen AI accelerators and quantum computing platforms, where memory bandwidth and power constraints directly affect system performance.

Key trends shaping the landscape include the transition to HBM3 and HBM3E standards, increased use of chiplet and memory stacking architectures, and a shift toward domain-specific memory customization. Notable developments include Micron’s supply of 36 GB, 12-high HBM3E stacks for AMD’s MI350 accelerators, which carry 288 GB of HBM3E each, reflecting a strong push toward AI-optimized, high-capacity memory. Samsung’s unveiling of a 12-high, 36 GB HBM3E stack built with advanced TSVs highlights the industry’s move toward taller stacks and higher bandwidth. Meanwhile, collaboration is intensifying across semiconductor foundries, OSAT providers, and chipmakers to refine 2.5D/3D packaging. Performance tuning efforts are increasingly focused on thermal efficiency, signal integrity, and memory-controller design, so that HBM and related memory technologies can keep pace with the rapidly scaling demands of modern compute infrastructure.

Segment Highlights and Performance Overview:

By Type

Graphics Processing Units (GPUs) remain the dominant deployment environment for HBM, capturing approximately 55% of the market by type. GPUs are foundational to AI model training, deep learning, advanced gaming, and rendering workloads—all of which demand ultra-fast data throughput and minimal latency. HBM adoption in GPUs continues to rise as both enterprise and consumer markets push for higher-performance AI and graphics capabilities.

By Bandwidth Level

The 256–512 GB/s bandwidth segment accounts for the largest share at roughly 48%, matching the per-stack bandwidth of HBM2 (about 256 GB/s) and HBM2E (up to roughly 460 GB/s), both widely deployed in current processors and accelerators. This bandwidth range offers a balance of performance and cost-efficiency, making it a preferred choice for machine learning, scientific computing, and high-end gaming systems.
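Per-stack HBM bandwidth follows from the interface width and the per-pin data rate: bandwidth (GB/s) = pin rate (Gb/s) × bus width (bits) / 8. The sketch below shows how HBM2 and HBM2E land in or near the 256–512 GB/s segment. The 1024-bit interface and the pin rates used are commonly cited values rather than figures from this report, and the handling of the segment boundaries (which bucket exactly 256 GB/s falls into) is an assumption.

```python
def stack_bandwidth_gb_s(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth of one HBM stack in GB/s (bits per second divided by 8)."""
    return pin_rate_gbps * bus_width_bits / 8.0


def bandwidth_segment(gb_s: float) -> str:
    """Map a bandwidth to the report's segments; boundary handling is assumed."""
    if gb_s <= 256.0:
        return "Up to 256 GB/s"
    if gb_s <= 512.0:
        return "256–512 GB/s"
    return "Above 512 GB/s"


# Illustrative per-pin data rates in Gb/s (assumptions, not from the report).
EXAMPLE_PIN_RATES = {"HBM2": 2.0, "HBM2E": 3.6, "HBM3": 6.4}

if __name__ == "__main__":
    for generation, pin_rate in EXAMPLE_PIN_RATES.items():
        bandwidth = stack_bandwidth_gb_s(pin_rate)
        print(f"{generation}: {bandwidth:.1f} GB/s per stack -> {bandwidth_segment(bandwidth)}")
```

An accelerator with several stacks multiplies these per-stack figures, which is why device-level bandwidth is usually quoted in TB/s.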

By End-Use

Data Centers lead the end-use segment with around 42% market share. The sharp increase in AI and big data workloads is accelerating the deployment of HBM-enabled systems across cloud providers, hyperscalers, and enterprise IT environments. These organizations are leveraging HBM-integrated GPUs and AI chips to boost memory access speeds, reduce power draw, and enhance overall computational throughput—solidifying the segment’s leadership position.

Geographical Analysis:

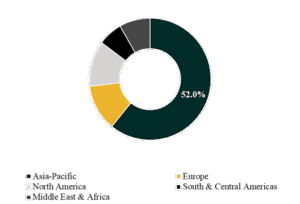

The global Memory & HBM market spans five key regions: North America, Europe, Asia-Pacific, South & Central America, and the Middle East & Africa.

Asia-Pacific commands the largest regional share, estimated between 47% and 52%, owing to its stronghold in semiconductor manufacturing, advanced packaging capabilities, and high-density memory production. The region’s integration of high-performance computing across telecommunications, consumer electronics, and industrial automation sectors further strengthens its position as the market leader.

North America, however, is projected to register the fastest growth, with a forecast CAGR of 17% to 19%. This acceleration is supported by rising AI workloads, expanding cloud infrastructure, and increasing investment in high-bandwidth, low-latency memory to power next-generation data centers and compute platforms.

Competition Landscape:

The Memory & HBM market is highly competitive, driven by a tight race among top-tier semiconductor players, chip designers, and packaging solution providers. Industry leaders are investing heavily in R&D, forging partnerships, and advancing memory architectures to maintain technological leadership in a fast-evolving market.

Key companies profiled in this space include:

Samsung Electronics Co., Ltd., SK hynix Inc., Micron Technology, Inc., Intel Corporation, Advanced Micro Devices, Inc., NVIDIA Corporation, Taiwan Semiconductor Manufacturing Company Limited (TSMC), ASE Technology Holding Co., Ltd., Rambus Inc., and Marvell Technology, Inc.

These players are at the forefront of innovation in HBM production, advanced integration, and next-generation packaging—each contributing to the rapid evolution of memory technologies that underpin modern compute performance.

Recent Developments:

- In June 2025, Advanced Micro Devices, Inc. introduced the MI350 accelerators, each featuring 288 GB of HBM3E supplied by Samsung and Micron. This marks a new milestone in AI hardware capacity, setting a higher benchmark for ultra-high-bandwidth memory and driving increased competition among HBM suppliers targeting AI and HPC markets.

- On March 18, 2025, at GTC, NVIDIA Corporation announced the Blackwell Ultra DGX SuperPOD, built from systems with 72 HBM3E-equipped GPUs each, alongside B300 systems carrying 2.3 TB of HBM3E. These advancements are fast-tracking HBM adoption in AI data centers and intensifying market rivalry among memory manufacturers.
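The capacity figures in these announcements can be reproduced by simple multiplication. The sketch below assumes eight 36 GB HBM3E stacks per MI350 accelerator and eight 288 GB GPUs per B300 system. Both unit counts are assumptions added for illustration and are not stated in this report.

```python
def total_capacity_gb(units: int, gb_per_unit: int) -> int:
    """Total memory capacity in GB for identical units (stacks or GPUs)."""
    return units * gb_per_unit


# Assumed configurations (not from the report).
MI350_STACKS, STACK_GB = 8, 36
B300_GPUS, GPU_GB = 8, 288

if __name__ == "__main__":
    per_accelerator = total_capacity_gb(MI350_STACKS, STACK_GB)
    per_system = total_capacity_gb(B300_GPUS, GPU_GB)
    print(f"MI350 accelerator: {per_accelerator} GB of HBM3E")
    print(f"B300 system: {per_system} GB, about {per_system / 1000:.1f} TB of HBM3E")
```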

Segmentations:

By Type:

- Graphics Processing Units (GPUs)

- Central Processing Units (CPUs)

- Others

By Bandwidth Level:

- Up to 256 GB/s

- 256–512 GB/s

- Above 512 GB/s

By End-Use:

- Graphics

- High-performance Computing

- Networking

- Data Centers

- Others

Companies:

- Samsung Electronics Co., Ltd.

- SK hynix Inc.

- Micron Technology, Inc.

- Intel Corporation

- Advanced Micro Devices, Inc.

- NVIDIA Corporation

- Taiwan Semiconductor Manufacturing Company Limited (TSMC)

- ASE Technology Holding Co., Ltd.

- Rambus Inc.

- Marvell Technology, Inc.

Need Deeper Insights or Custom Analysis?

Contact Blackwater Business Consulting to explore how this data can drive your strategic goals.